What is lens vignetting? How can it be corrected in embedded vision applications?

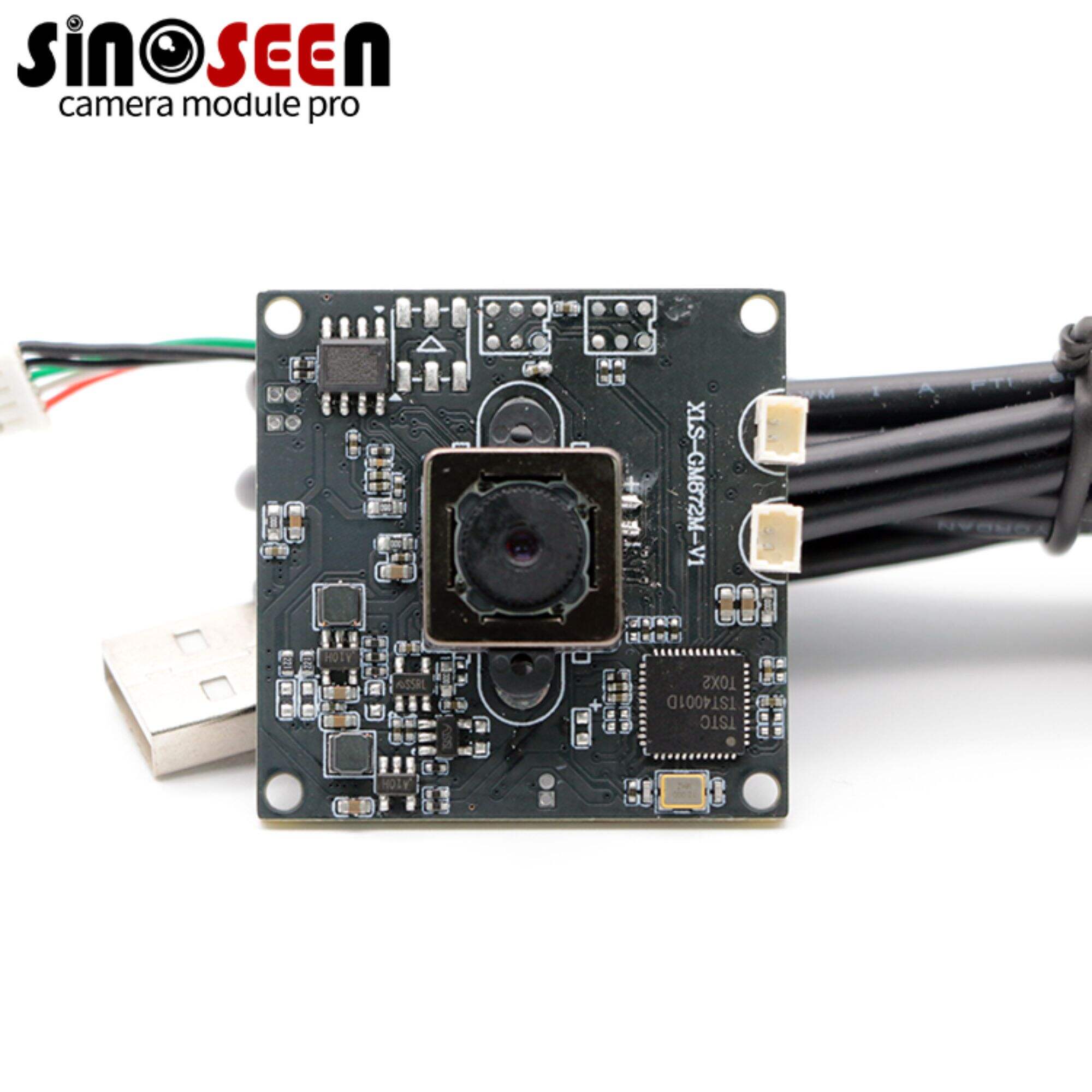

The rapid advancement of image processing platforms has revolutionized the field, offering reliable and cost-effective embedded vision solutions across many markets. These platforms improve image quality through enhancement, restoration, encoding, and compression, using operations such as illumination correction, image resizing (digital zoom), edge detection, and the evaluation of segmentation algorithms. In these applications, CMOS image sensors have become the most widely used sensor type: they capture incoming light as a pixel array that serves as the foundation for all subsequent image processing.

However, selecting the right lens to pair with a camera module for optimal image capture and processing is challenging. It involves determining the correct field of view (FOV), choosing between fixed focus and autofocus, and setting the working distance. In addition, phenomena such as lens vignetting and white balance issues can interfere with the image output and degrade the final visual quality.

In this article, we will delve into the concept of lens vignetting, analyze its causes, and provide practical solutions to help embedded camera users eliminate this image quality issue.

What is lens vignetting?

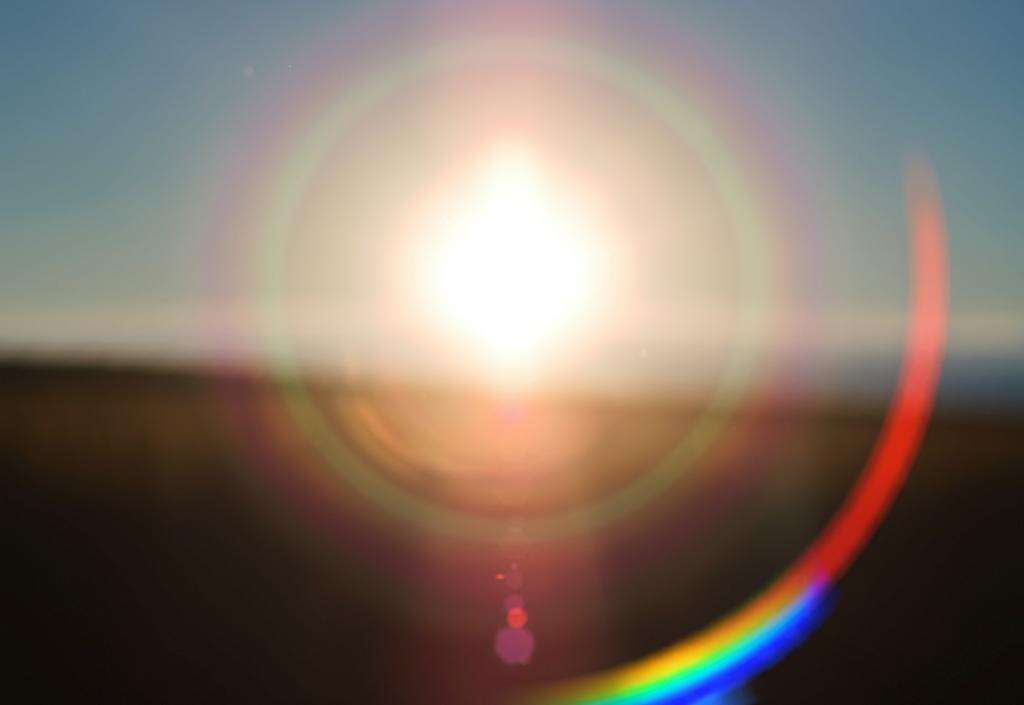

Lens vignetting is the gradual decrease in brightness or saturation from the center of an image toward its edges or corners. Also known as shading, light fall-off, or luminance shading, vignetting is typically measured in f-stops, and its extent depends on the lens aperture size and various other design parameters.
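Because vignetting is quoted in f-stops, and each stop is a halving of light, it can be expressed as the base-2 logarithm of the ratio between center and corner brightness. The sketch below assumes you already have mean pixel values from a center patch and a corner patch of a uniformly lit target, in linear units (not gamma-encoded, dark level subtracted); the function name `vignetting_stops` is hypothetical.

```python
import math

def vignetting_stops(center_mean: float, corner_mean: float) -> float:
    """Light fall-off in stops between the image center and a corner.

    Both inputs are assumed to be mean *linear* sensor values (dark level
    already subtracted) measured on a uniformly illuminated target.
    """
    if center_mean <= 0 or corner_mean <= 0:
        raise ValueError("means must be positive linear values")
    # One stop is a factor of two in light, so take log2 of the ratio.
    return math.log2(center_mean / corner_mean)

# A corner at one quarter of the center brightness is 2 stops darker.
print(vignetting_stops(800.0, 200.0))  # 2.0
```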

The aperture controls image brightness by changing the total amount of light that passes through the lens to the camera sensor.
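The light admitted through the aperture scales with its area, which is proportional to 1/N², where N is the f-number. The hypothetical helper below converts a change of f-number into a change in exposure, in stops, which helps when weighing aperture settings against vignetting.

```python
import math

def stops_between(n_from: float, n_to: float) -> float:
    """Exposure change in stops when moving from f/n_from to f/n_to.

    Admitted light is proportional to 1 / N**2, so the light ratio is
    (n_from / n_to) ** 2. A negative result means less light reaches the sensor.
    """
    light_ratio = (n_from / n_to) ** 2
    return math.log2(light_ratio)

print(stops_between(2.0, 4.0))  # -2.0: stopping down from f/2 to f/4 loses 2 stops
print(stops_between(2.8, 2.0))  # about +0.97 stops
```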

Vignetting not only affects the visual quality of an image but can also lead to the loss of crucial visual information in certain applications. For instance, in industrial inspection or medical imaging that requires precise color and brightness consistency, vignetting can result in misjudgments or inaccurate analysis. Therefore, understanding and taking measures to reduce or eliminate lens vignetting is essential for ensuring image quality and enhancing the performance of vision systems.

How is vignetting formed, and what types does it include?

Lens vignetting can be attributed to several factors, chiefly the design of the optical elements themselves. External objects that obstruct light, such as filter rings or lens hoods, can make it worse, and vignetting is sometimes introduced intentionally during post-processing.

The different causes of lens vignetting include:

- Optical vignetting: Caused by the physical dimensions of the lens itself, which prevent off-axis light from fully reaching the edges of the image sensor. It is particularly evident in complex optical systems with multiple lens elements.

- Natural vignetting: Also known as cos⁴θ fall-off, this is an inherent decrease in brightness caused by the angle θ between the incoming light and the optical axis. Following the cosine-fourth law, image-plane illumination is proportional to cos⁴θ, so brightness drops rapidly as the off-axis angle increases.

- Chief Ray Angle (CRA): The CRA is an important parameter when pairing lenses and sensors, because it affects brightness and sharpness at the image edges. If the lens CRA is too large for the sensor, light near the edges is poorly collected, causing shading toward the image edges and degrading image quality.

- Mechanical vignetting: Occurs when the light beam is physically blocked by the lens mount, filter rings, or other objects, causing an unwanted brightness reduction at the image edges. It is also common when the lens's image circle is smaller than the sensor.

- Post-processing: Photographers sometimes add a vignette effect intentionally during post-processing, for artistic effect or to draw attention to the central subject of an image.
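The cos⁴θ law for natural vignetting can be checked numerically. In an idealized thin-lens model focused at infinity, a point at distance h from the image center corresponds to a field angle of roughly θ = atan(h / f). The sketch below assumes that geometry (real lenses, including those with large CRA or telecentric designs, deviate from it) and reports relative illumination and the equivalent loss in stops. The sensor half-diagonal and focal lengths are hypothetical example values. For the same sensor, a longer focal length means smaller field angles and therefore less cos⁴θ fall-off.

```python
import math

def relative_illumination(image_height_mm: float, focal_length_mm: float) -> float:
    """Off-axis to on-axis illumination ratio predicted by the cos^4 law.

    Assumes an idealized thin lens where the field angle is atan(h / f);
    ignores optical, mechanical and CRA-related vignetting.
    """
    theta = math.atan2(image_height_mm, focal_length_mm)
    return math.cos(theta) ** 4

# Hypothetical sensor with a 4.0 mm half-diagonal (center to corner).
half_diag = 4.0
for f in (3.0, 6.0, 12.0):
    ri = relative_illumination(half_diag, f)
    print(f"f = {f:4.1f} mm  RI = {ri:.3f}  loss = {-math.log2(ri):.2f} stops")
```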

What are the methods to correct lens vignetting?

As discussed, lens vignetting is usually an undesirable optical phenomenon. Although it cannot be avoided entirely, it can be effectively corrected in embedded vision systems with the following measures:

- Matching CRA values: Keeping the lens CRA below the CRA of the sensor's microlenses is crucial for avoiding illumination fall-off and color shading at the image edges. Manufacturers must therefore check lens designs against the sensor layout.

- Tuning the Image Signal Processor (ISP): The ISP processes the images captured by the sensor. Test tools such as Imatest can measure image quality, and the results can be used to adjust specific ISP registers to compensate for lens shading.

- Increasing the f-number: Increasing the lens's f-number (i.e., using a smaller aperture) reduces optical vignetting. Natural cos⁴θ fall-off, by contrast, depends on the field angle rather than the aperture, so stopping down does little to reduce it.

- Using a longer focal length: With low-f/# lenses (f/# being the ratio of focal length to aperture diameter), short-focal-length lenses, or designs that must deliver high resolution at low cost, mechanical vignetting can be eliminated by switching to a longer focal length.

- Flat-field correction: A common method for correcting heavy lens vignetting. It involves uniformly illuminating a flat surface, capturing a dark frame and a bright (flat) reference frame, and then computing and applying a per-pixel flat-field correction.

- Using software tools: Various software tools, such as microscopy image stitching tools and CamTool, can be used for lens shading correction.

- Using telecentric lenses: Lenses that are telecentric in image space can correct illumination roll-off, because their chief rays reach the sensor parallel to the optical axis. This produces extremely uniform image-plane illumination and eliminates the normal cos⁴θ fall-off from the optical axis to the corners of the field.
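The flat-field correction step from the list can be sketched in a few lines of NumPy. This assumes three same-size frames in linear (raw or linearized) units: the image to correct, a dark frame captured with the lens capped, and a flat frame of a uniformly illuminated surface. The function name, the `eps` guard, and normalizing by the mean of the dark-subtracted flat are illustrative choices, not a specific vendor's ISP algorithm.

```python
import numpy as np

def flat_field_correct(raw: np.ndarray, dark: np.ndarray, flat: np.ndarray,
                       eps: float = 1e-6) -> np.ndarray:
    """Flat-field correction: (raw - dark) * mean(flat - dark) / (flat - dark).

    raw, dark and flat are same-shape arrays in linear units. `eps` guards
    against division by zero in dead or fully shaded pixels.
    """
    raw = raw.astype(np.float64)
    dark = dark.astype(np.float64)
    flat = flat.astype(np.float64)

    gain_map = flat - dark                  # per-pixel response, including vignetting
    gain_map = np.maximum(gain_map, eps)    # avoid dividing by zero
    norm = gain_map.mean()                  # preserve overall brightness
    return (raw - dark) * norm / gain_map

# Synthetic check: a uniform scene seen through a radial vignette.
h, w = 5, 5
yy, xx = np.mgrid[0:h, 0:w]
r2 = (yy - h // 2) ** 2 + (xx - w // 2) ** 2
vignette = 1.0 - 0.05 * r2                  # bright center, darker corners
dark = np.full((h, w), 10.0)
flat = dark + 200.0 * vignette
raw = dark + 120.0 * vignette
corrected = flat_field_correct(raw, dark, flat)
print(np.allclose(corrected, corrected[0, 0]))  # True: fall-off removed
```

In practice the dark and flat frames are usually averaged over several captures to suppress noise before building the gain map.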

We hope this article helps you address lens vignetting. If you still have questions about overcoming lens vignetting in embedded vision, or wish to integrate embedded camera modules into your products, feel free to contact us at Sinoseen, an experienced Chinese camera module manufacturer.
