What is low-latency camera streaming? What factors are involved?

Latency, or “lag”, is the time it takes for information to travel from one point to another. In camera streaming, latency is the interval between the moment an image is captured and the moment it appears on the end user's screen, and low-latency camera streaming keeps that interval as short as possible. The higher the latency, the worse the streaming experience, because what the viewer sees lags behind what is actually happening. In video conferencing, for example, high latency disrupts the natural flow of conversation.

For embedded cameras, high latency can cripple an entire system, especially in self-driving vehicles that must make decisions based on captured image and video data. In this post, we take a deeper look at the basic concepts of low-latency camera streaming and the factors that influence it.

What exactly is low-latency camera streaming, and why does it matter?

Low-latency camera streaming keeps the delay involved in capturing, sharing, and receiving image data small enough to be almost negligible. There is no single, uniform definition of what counts as low latency, although the industry has adopted some de facto standards.

In time-sensitive domains, high latency can render embedded vision applications ineffective. Consider real-time patient monitoring devices, which rely on low-latency streaming to share what the monitoring cameras capture as it happens. Any delay in transmitting this information from the camera at the patient's bedside to the device used by the physician, clinician, or nurse could result in a life-threatening situation.

Low-latency camera streams are also essential to a good user experience. The benefits are most obvious to users of online auctions and game streaming services, where an extra second of latency can cost something that cannot be recovered.

How does low latency camera streaming work?

Video streaming is a complex process that involves multiple steps, starting with a camera capturing live video, which is then processed, encoded, and ultimately transmitted to the end user. Here is a detailed breakdown of this process and how each step affects the overall latency.

- Video Capture: First, the camera captures live video. This is the starting point of the entire process, and the camera's performance directly affects the quality and latency of the stream. A camera with a fast sensor readout and a high frame rate makes each frame available sooner, laying the foundation for a low-latency stream.

- Video Processing: Captured video is then processed, which may include denoising, color correction, resolution adjustments, and so on. The processing steps must be as efficient as possible to avoid introducing additional latency.

- Encoding: The processed video is then sent to an encoder, which compresses it into a format suitable for network transmission. Choosing the right encoder and encoding settings is critical to achieving low latency.

- Network transmission: The encoded video stream is transmitted over the network to the end user. This step is one of the main sources of latency, as network bandwidth, connection quality and routing efficiency all affect the speed of data transfer.

- Decoding and Display: Finally, the end-user's device decodes the video stream and displays it on the screen. The decoding process must be fast and efficient to ensure that the video can be played in real time.
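Because every stage adds its own delay, it can help to think of the pipeline above as a latency budget. The sketch below is a minimal illustration, assuming that the glass-to-glass latency of a single frame is simply the sum of the per-stage latencies. The `Stage` class, the helper functions, and all the millisecond values are hypothetical placeholders, not measurements of any real camera or network.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    latency_ms: float  # assumed delay this stage adds to each frame


def glass_to_glass_ms(stages):
    """End-to-end latency of one frame, modeled as the sum of stage latencies."""
    return sum(s.latency_ms for s in stages)


def largest_contributor(stages):
    """Return the stage that adds the most latency (the first place to optimize)."""
    return max(stages, key=lambda s: s.latency_ms)


# Hypothetical budget for illustration only; these are not measured values.
pipeline = [
    Stage("capture", 33.3),         # roughly one frame interval at 30 fps
    Stage("processing", 5.0),
    Stage("encoding", 10.0),
    Stage("network", 40.0),
    Stage("decode+display", 20.0),
]

total = glass_to_glass_ms(pipeline)
worst = largest_contributor(pipeline)
print(f"total: {total:.1f} ms, largest contributor: {worst.name}")
for s in pipeline:
    share = 100.0 * s.latency_ms / total
    print(f"{s.name:>15}: {s.latency_ms:5.1f} ms ({share:4.1f}%)")
```

Replacing the placeholder numbers with values measured on a real system shows which stage to optimize first.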

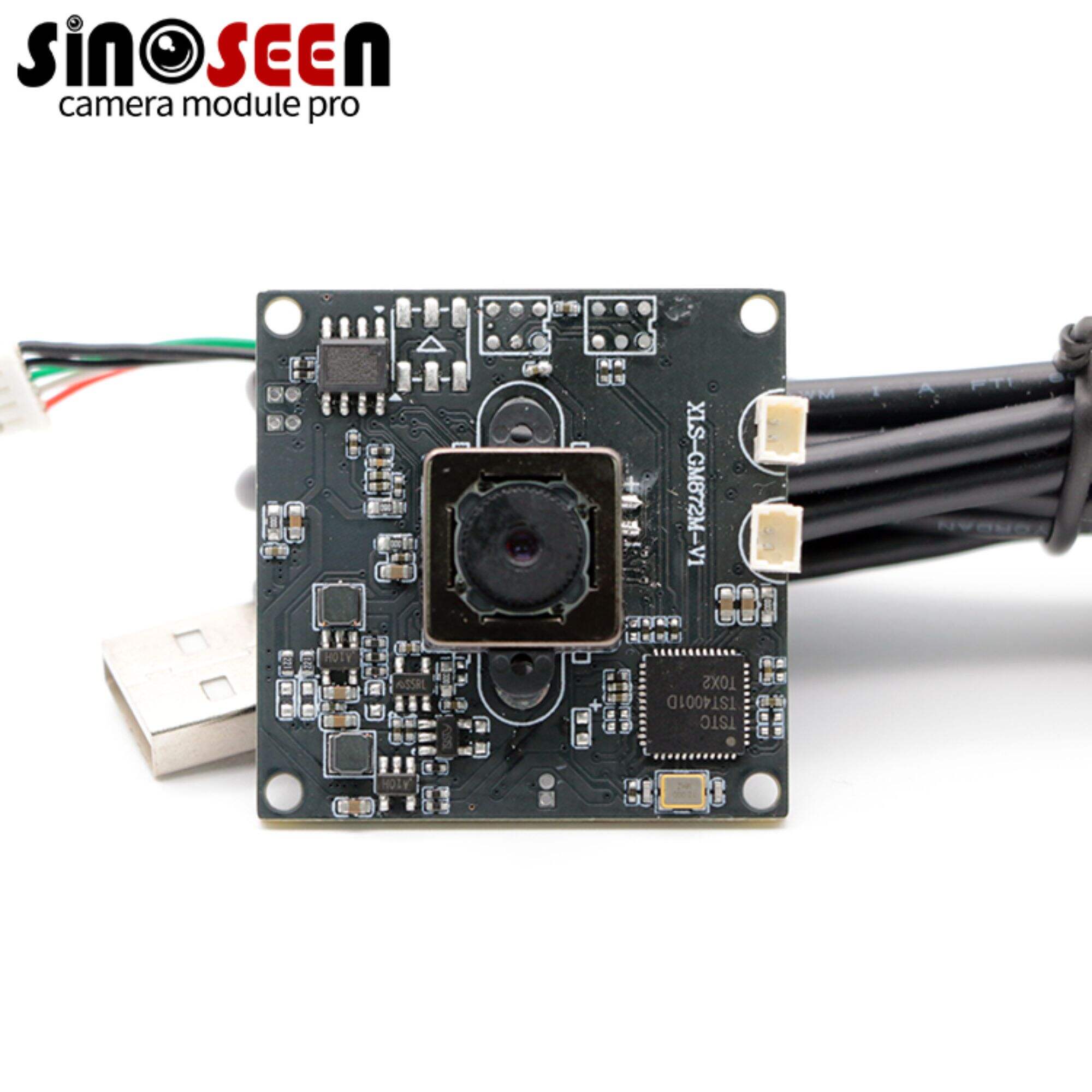

Latency can occur at any step in the process, so each step must be optimized to achieve a low-latency camera stream. This includes selecting a high-performance camera module, using efficient video processing algorithms, choosing the right encoder, ensuring a stable and efficient network connection, and optimizing the decoding process.

In addition, there are techniques that can further reduce latency, such as using more advanced compression algorithms to reduce the data size or employing specialized low-latency streaming protocols.

What are the factors that affect low-latency camera streaming?

Implementing low-latency camera streaming is no easy task: it requires a deep understanding and careful optimization of the many factors that affect its performance. The following factors have a significant impact on the performance of low-latency camera streaming:

Bandwidth: Bandwidth is the maximum rate at which a network link can carry data. A higher-bandwidth link moves each frame in less time and is less likely to become congested, so data spends less time waiting in queues. In low-latency camera streaming, it is important to ensure there is enough bandwidth to carry the video data, especially for high-resolution, high-frame-rate streams.
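To see why bandwidth matters so much, compare the bitrate of uncompressed video with the capacity of the link. The sketch below assumes 1080p video at 30 fps in a YUV 4:2:0 layout (12 bits per pixel on average). The 70% utilization ceiling in `fits_on_link` and the 8 Mbit/s encoded stream are hypothetical values chosen for illustration, not standards.

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel):
    """Bitrate of uncompressed video in bits per second."""
    return width * height * bits_per_pixel * fps


def fits_on_link(stream_bps, link_bps, max_utilization=0.7):
    """True if the stream leaves headroom on the link.

    max_utilization is an assumed safety margin, not a standard value:
    a link running close to capacity tends to queue packets, adding delay.
    """
    return stream_bps <= link_bps * max_utilization


# Uncompressed 1080p at 30 fps in YUV 4:2:0 (12 bits per pixel on average).
raw = raw_bitrate_bps(1920, 1080, 30, 12)
print(f"raw 1080p30: {raw / 1e6:.1f} Mbit/s")
print("raw on 1 Gbit/s link fits:", fits_on_link(raw, 1e9))

# Hypothetical 8 Mbit/s encoded stream on a 100 Mbit/s link.
print("encoded on 100 Mbit/s link fits:", fits_on_link(8e6, 100e6))
```

Uncompressed 1080p30 needs roughly 746.5 Mbit/s, which already crowds a gigabit link. This is why encoding and bandwidth have to be considered together.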

Connectivity: Connectivity refers to the method and medium of data transmission, such as fiber optics, wide area networks (WANs), and Wi-Fi. Each option offers different transmission rates and stability. For example, a GMSL (Gigabit Multimedia Serial Link) camera provides a low-latency link over a single coaxial cable, which makes it especially suitable for embedded cameras located 15 to 20 meters from the host processor.

Distance: The impact of geographic distance on low-latency streaming cannot be ignored. Signals travel at a finite speed, so the longer the distance the data must cover, the longer it takes to arrive. The physical distance between the camera and the data processing center therefore needs to be considered when designing the system.
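The effect of distance can be estimated from the propagation speed of the signal. The sketch below assumes a straight fiber path and a signal speed of about 2 × 10^8 m/s, roughly two-thirds of the speed of light in a vacuum. Both the constant and the example distances are illustrative assumptions.

```python
# Light in optical fiber travels at roughly two-thirds of its vacuum speed.
# 2.0e8 m/s is an assumed round figure for illustration.
SPEED_IN_FIBER_M_PER_S = 2.0e8


def propagation_delay_ms(distance_m, speed_m_per_s=SPEED_IN_FIBER_M_PER_S):
    """One-way propagation delay in milliseconds over a straight path."""
    return distance_m / speed_m_per_s * 1000.0


for label, meters in [("20 m", 20), ("1,000 km", 1_000_000), ("10,000 km", 10_000_000)]:
    one_way = propagation_delay_ms(meters)
    print(f"{label:>10}: {one_way:.4f} ms one way, {2 * one_way:.4f} ms round trip")
```

Real network routes are longer than a straight line and add switching and queuing delays, so these figures are a lower bound. Still, they show why a camera a few meters from its host adds essentially no propagation delay, while a data center on another continent adds tens of milliseconds.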

Encoding: Encoding is a critical step in the video streaming process because it determines the size of the video data and how efficiently that data can be transmitted. To achieve low-latency camera streaming, choose an encoder that matches the video streaming protocol, and tune its settings to minimize the time spent encoding.
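As one concrete example of encoder tuning, the sketch below builds an FFmpeg command line that uses the libx264 H.264 encoder with settings commonly used for low latency, and sends the result as an MPEG transport stream over UDP. The input file, destination address, bitrate, and keyframe interval are hypothetical placeholders, and the `low_latency_x264_cmd` helper is illustrative only. The code only constructs and prints the command, so FFmpeg does not need to be installed to run it.

```python
import shlex


def low_latency_x264_cmd(src, dst, fps=30, bitrate="4M"):
    """Build an FFmpeg argument list for low-latency H.264 encoding (a sketch)."""
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "libx264",
        "-preset", "ultrafast",   # fastest x264 preset: less encode time per frame
        "-tune", "zerolatency",   # x264 tuning that disables lookahead and B-frames
        "-bf", "0",               # no B-frames (explicit), so frames are not held for reordering
        "-g", str(fps),           # max keyframe interval in frames (assumed: one second)
        "-b:v", bitrate,          # target video bitrate (assumed value)
        "-f", "mpegts",           # MPEG transport stream, commonly sent over UDP
        dst,
    ]


cmd = low_latency_x264_cmd("input.mp4", "udp://127.0.0.1:5000")
print(shlex.join(cmd))
```

The trade-off is compression efficiency: a faster preset without B-frames produces more bits for the same quality, which shifts some of the burden back onto bandwidth.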

Video format: The video format (compression standard) determines how large the encoded video is, and the size of the video data directly affects latency over the network: the larger the data, the longer it takes to transmit. Optimizing the size of the video data is therefore an effective way to reduce latency, but it requires finding the right balance between video quality and size. For guidance on choosing between the H.264 and H.265 formats, see this article.
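The link between format, data size, and latency can be shown with a rough per-frame calculation. The sketch below assumes constant-bitrate streams at 30 fps over a hypothetical 20 Mbit/s uplink. It also assumes bitrates of 8 Mbit/s for H.264 and 4 Mbit/s for H.265 at similar visual quality. That ratio is an illustrative assumption, since the real savings depend on content and encoder settings.

```python
def avg_frame_bits(bitrate_bps, fps):
    """Average encoded frame size in bits for a constant-bitrate stream."""
    return bitrate_bps / fps


def frame_transfer_ms(frame_bits, link_bps):
    """Time to push one frame onto the link (serialization delay), in ms."""
    return frame_bits / link_bps * 1000.0


LINK_BPS = 20e6  # hypothetical 20 Mbit/s uplink
FPS = 30

# Assumed bitrates for similar visual quality (illustrative, not benchmarks).
for codec, bitrate in [("H.264", 8e6), ("H.265", 4e6)]:
    bits = avg_frame_bits(bitrate, FPS)
    print(f"{codec}: {bits / 8 / 1000:.1f} kB per frame, "
          f"{frame_transfer_ms(bits, LINK_BPS):.2f} ms to send")
```

These are averages. Keyframes are considerably larger than the frames between them, so they take longer to send and can cause periodic latency spikes.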

By carefully optimizing and managing these factors, you can significantly improve the performance of a low-latency camera stream and give users a smoother, closer-to-real-time video experience.

What are the embedded vision applications that rely on low-latency camera streaming?

Video Conferencing

With remote work and online education now widespread, low-latency camera streams directly affect how smooth and interactive video conferences are. High latency makes conversations fall out of sync and disrupts the exchange of information, reducing the efficiency of meetings and the quality of the learning experience.

Remote Medical Monitoring

Low-latency camera streams are critical for remote patient monitoring and diagnosis. Doctors and nurses can use these systems to monitor a patient's vital signs and health status in real time so they can make timely treatment decisions. Any delay can lead to misdiagnosis or treatment delays, posing a threat to the patient's life.

Low-latency technology is critical to a smooth, real-time video experience. Whether in video conferencing, remote medical monitoring, quality inspection, autonomous vehicle control, or security surveillance, low-latency camera streaming plays an integral role.

If you need low-latency camera streaming, please feel free to contact us. Sinoseen has more than 14 years of experience designing customizable cameras and can provide a low-latency solution for your embedded vision application.
